Lab 12: Path Planning and Execution


Overall

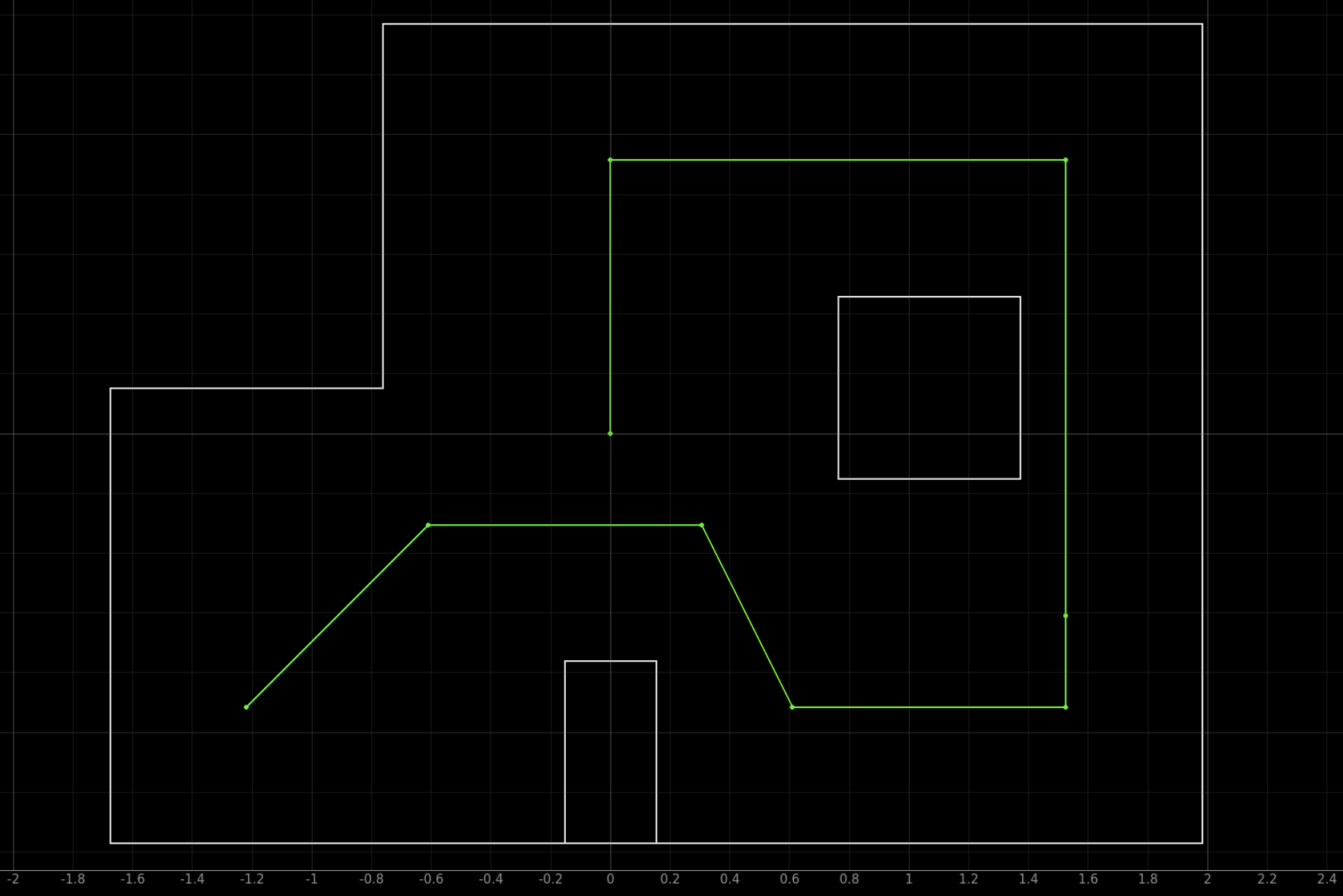

I worked with Trevor and Jack to navigate our robot through a preset route of waypoints assigned to us in the lab (shown below). Overall, we were very successful in reaching and navigating through these waypoints completely autonomously with a combination of on-board PID control and off-board localization processing! The final result was incredibly rewarding, as it required us to combine various aspects of code and hardware debugging learned throughout the semester with new navigation and waypoint logic.

We began with complementary arrays of waypoints and localization booleans (i.e. a point, and a boolean to signal the script to localize at that waypoint). This allowed us to manually choose which points we localized at, based on how accurate localization was at each waypoint, for the most accurate navigation. To navigate between points, we ran orientation PID and then position PID in sequence, using the DMP onboard our IMU for heading and the Kalman-filtered ToF sensor for distance.

We used my robot for this lab, and collaborated jointly on both Arduino and Python code components!

Python Implementation

Python Code

Below is the core logic and control flow for our entire lab. It is responsible for calling all functions and Arduino commands. The Python code is explained at length below, and all Arduino commands are covered in the next section.

# return current location, current orientation

=

await

# Run Update Step

=

= 3.281* # x

= 3.281* # y

#assume we are at the waypoint

= #set current location to x,y of the current waypoint

return

# based on current location, calculate and return required angle

=

=

=

=

return

#Implement logic

=

=

=

= *304.8 #convert from ft to mm

= -

return

# Reset Plots

# Init Uniform Belief

=

= # encode whether to localize at a waypoint

= #initialize starting loc as the first waypoint

= 0 # [mm]

# i represents current waypoint (starts at zero)

=

# P|I|D

await

await

=

= .

=

# P|I|D

await

= await

Core Logic and Control Flow

The navigation path is defined by a list of 2D waypoints waypoint_list, with each point representing a target location in feet. An accompanying list of boolean flags localize_flags determines whether the robot should re-localize its position at each waypoint using a grid-based localization system.
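A minimal sketch of how the two lists pair up. The names `waypoint_list` and `localize_flags` come from the description above; the coordinates, the flag values, and the `plan` helper are illustrative assumptions, not the route or code used in the lab.

```python
# Illustrative values only: the lab's actual route is not reproduced here.
waypoint_list = [(-4, -3), (-2, -1), (1, -1), (2, -3)]   # (x, y) in feet
localize_flags = [False, True, False, True]              # localize at this waypoint?

# The lists are complementary, so they must stay the same length.
assert len(waypoint_list) == len(localize_flags)


def plan(waypoints, flags):
    """Pair each waypoint with its localization flag, in route order (hypothetical helper)."""
    return [
        {"index": i, "target_ft": wp, "localize": flag}
        for i, (wp, flag) in enumerate(zip(waypoints, flags))
    ]


for step in plan(waypoint_list, localize_flags):
    action = "localize on arrival" if step["localize"] else "assume we are at the waypoint"
    print(f"waypoint {step['index']}: {step['target_ft']} ft, {action}")
```

Keeping the flags in a separate list makes it easy to toggle localization for a single waypoint between runs without touching the route itself.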

The script continuously loops through all waypoints in sequence, executing the following steps for each:

Turn Toward the Next Waypoint:

- Calculates the angle between the robot’s current position and the next waypoint using the atan2 function.
- Sends a PID control command over Bluetooth to rotate the robot to the calculated heading (CMD.PID_TURN_CONTROL, described in the Arduino Implementation section).

Read Current TOF Sensor Data:

- Requests the current pose from the robot (CMD.SEND_CURRENT_POSE, described in the Arduino Implementation section).
- Extracts the distance reading from the CSV.

Move Toward the Waypoint:

- Computes the expected distance to the next waypoint based on the current position belief.
- Subtracts the distance to the next point from the current TOF reading to calculate the necessary target distance for the next motion.
- Sends a PID control command over Bluetooth to move the robot forward to the calculated target (CMD.PID_CONTROL, described in the Arduino Implementation section).

Update Robot Location:

- If localization is enabled for the current waypoint, the robot turns to zero degrees and then performs an observation scan (function perform_observation_loop() from Lab 11) and sends the data through BLE. Then, an update step is performed to estimate the robot’s current position.
- If localization is disabled, the robot’s position is assumed to be exactly at the waypoint.
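The geometry behind these steps can be sketched as follows. This is a hedged illustration rather than the lab script: the function names are hypothetical, and the 3.281 factor that appears in the script is assumed here to convert a localization belief in metres to feet. The 304.8 factor (feet to millimetres) is taken from the script's own comment.

```python
import math

FT_TO_MM = 304.8   # feet -> millimetres (matches the script's comment)
M_TO_FT = 3.281    # metres -> feet (assumed meaning of the 3.281 factor)


def heading_to(current_ft, target_ft):
    """Heading in degrees from the current position to the target, via atan2."""
    dx = target_ft[0] - current_ft[0]
    dy = target_ft[1] - current_ft[1]
    return math.degrees(math.atan2(dy, dx))


def tof_target_mm(current_ft, target_ft, tof_reading_mm):
    """ToF distance the linear PID should settle at after driving to the target.

    The straight-line distance to the waypoint is subtracted from the
    current ToF reading, so the robot stops that much closer to the wall.
    """
    dx = target_ft[0] - current_ft[0]
    dy = target_ft[1] - current_ft[1]
    distance_mm = math.hypot(dx, dy) * FT_TO_MM
    return tof_reading_mm - distance_mm


def updated_location(waypoint_ft, localize, belief_m=None):
    """Use the localization belief if enabled, else assume the waypoint was reached."""
    if localize and belief_m is not None:
        return (belief_m[0] * M_TO_FT, belief_m[1] * M_TO_FT)
    return waypoint_ft


print(heading_to((0, 0), (1, 1)))           # heading for a diagonal move
print(tof_target_mm((0, 0), (3, 4), 2000))  # 5 ft move with a 2000 mm reading
```

Using `atan2` rather than `atan` keeps the heading in the correct quadrant when the next waypoint lies behind or to the left of the robot.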

Arduino Implementation

Note that all data sent from the Artemis to the computer over BLE is processed and piped into a CSV file by a notification handler. This CSV file is reset at specific points throughout the code before new ToF and gyro data comes in.
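A rough sketch of what such a handler could look like on the Python side. The payload layout (`time|yaw|tof` separated by `|`), the column names, and the helper names are all assumptions made for illustration; the actual message format used in the lab is not shown in this write-up.

```python
import csv
import os
import tempfile


def reset_csv(path, header=("time_ms", "yaw_deg", "tof_mm")):
    """Overwrite the CSV so only the header remains (hypothetical column names)."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerow(header)


def make_handler(path):
    """Build a notification callback that appends each BLE message as one CSV row."""
    def handler(_uuid, payload: bytearray):
        # Assumed payload format: fields separated by '|', e.g. b"120|90.5|1432".
        fields = payload.decode().strip().split("|")
        with open(path, "a", newline="") as f:
            csv.writer(f).writerow(fields)
    return handler


def read_rows(path):
    """Return all data rows, skipping the header."""
    with open(path, newline="") as f:
        return list(csv.reader(f))[1:]


# Simulate one notification without any BLE hardware.
demo_path = os.path.join(tempfile.mkdtemp(), "robot_data.csv")
reset_csv(demo_path)
make_handler(demo_path)(None, bytearray(b"120|90.5|1432"))
print(read_rows(demo_path))
```

Resetting the file before each command means the Python side can read the whole CSV afterwards without having to separate old readings from new ones.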

CMD.PID_TURN_CONTROL

This case is responsible for using orientation PID control to arrive at a desired target angle.

// Get PID parameters over BLE

success = robot_cmd.;

if return;

// Same for Kd & Ki

...

success = robot_cmd.;

if return;

tx_estring_value.;

// Variables used for cutoff timer (5 s)

...

int turn_cutoff = 5000;

while

;

pidTurn_start = 0;

;

break;

Initially, an arrived boolean flag (triggered when the robot came within a threshold of the target angle) was used to end the PID control. However, if the robot slightly overshot the target, the flag was still set because the robot had passed through the target on the way. The robot would then sit past the target with the arrived flag true and the PID control no longer running. We tried severely overdamping the system to fix this, but that left us with too much steady-state error. Our solution was to replace the flag with a 5-second cutoff timer before stopping the PID; this gave the robot plenty of time to settle on each angle and eliminated the early PID stoppage. The getDMP() and RunPIDRot variables are explained in great detail in Lab 6. The clearVariables() function appears in every case used in this lab; it clears all variables in the Arduino script at the end of a case so the next case is ready to run without any memory overflow.
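The difference between the two stopping rules can be illustrated with a toy simulation. This is not the Arduino code: it is a Python sketch of a proportional controller on a simple damped rotating body, with made-up gains, friction, and threshold chosen only so that the response overshoots slightly.

```python
def simulate(stop_rule, target=90.0, kp=16.0, friction=5.6,
             dt=0.01, duration=5.0, threshold=2.0):
    """Return the final heading error (deg) of a toy robot under a given stop rule.

    stop_rule: "arrived_flag" stops control the first time the error drops
               below `threshold`; "timer" keeps control running for `duration`.
    """
    theta, omega = 0.0, 0.0      # heading (deg) and angular velocity (deg/s)
    controlling = True
    for _ in range(round(duration / dt)):
        error = target - theta
        if stop_rule == "arrived_flag" and abs(error) < threshold:
            controlling = False  # flag trips once and the PID never resumes
        u = kp * error if controlling else 0.0
        omega += (u - friction * omega) * dt   # friction slows the coasting robot
        theta += omega * dt
    return abs(target - theta)


flag_error = simulate("arrived_flag")
timer_error = simulate("timer")
print(f"arrived flag: {flag_error:.2f} deg off, timer: {timer_error:.4f} deg off")
```

In this toy model the flag version coasts past the target after control stops, while the timer version keeps correcting until the cutoff, which mirrors the behavior described above.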

CMD.SEND_CURRENT_POSE

This case is responsible for sending a ToF reading to the computer over BLE to help inform the distance needed to travel.

distanceSensor1.;

while

;

// Send Data

tx_estring_value.;

tx_estring_value.;

tx_estring_value.;

tx_characteristic_string.;

;

break;

collectTOF is explained in many previous labs; it is responsible for collecting a TOF value and appending it to the TOF1 array.

CMD.PID_CONTROL

This case has a similar purpose to CMD.PID_TURN_CONTROL, except it is responsible for linear PID control rather than rotational.

distanceSensor1.;

pid_start = 0;

// Get PID parameters over BLE

success = robot_cmd.;

if return;

// Same for Kd & Ki

...

success = robot_cmd.;

if return;

tx_estring_value.;

// Variables used for cutoff timer (7 s)

...

int linear_cutoff = 7000;

while

;

;

run = 0;

break;

runPIDLin() is the same function used in Lab 7 for integrating Kalman filtering onto the robot; it is responsible for running the linear PID control and is explained in Labs 5 and 7. Just as in orientation PID control, a cutoff timer is used to eliminate the early ending of PID control that occurs with an arrived flag.

CMD.SPIN

This command is called in the perform_observation_loop() function and is responsible for collecting the gyro and ToF data used for localization; it is explained in Lab 11. The only change is swapping the arrived flag for a timer, just as in the orientation and linear PID cases.

Results

Below are a series of trials from our lab! In the last two runs you can see a live belief map and logging in the Jupyter notebook, which outputs critical information such as whether we are localizing at that specific waypoint, our current belief pose, and our calculated target heading. To speed up our runs, we did not localize at every point, since our waypoint-setting algorithm and PID control were fairly good at getting us across shorter distances.

Early Run

We saw much success with the structure of our high-level planning on the first run, but ran into trouble with inconsistencies in lower level control and angle targeting. When we localized, our planner output was correct based on position belief, but we struggled to tune the orientation PID loop in such a way that it responded (similarly) well to changes in angle between 10 and 180 degrees, while settling within a reasonable time. We changed our approach to add a time cutoff to both control loops, sacrificing a bit of accuracy for longer-term operation.

Nearly There

During this run we had to nudge the robot at (-5,-3) due to a ToF underestimation. The derivative term also blew up at (5,3) while localizing, so we had to manually point the robot at the temporary target angle. Apologies that we don't scroll down in the Jupyter Lab script until later in the video, so some of the outputs are hidden.

Final Run!

It was awesome to see everything from the semester really come together during this run! You can see the localization do a great job of correcting for ToF underestimation and overall noise. Coordinate (5,3) is a great example: the robot stops short of the marker but accounts for it by angling slightly upward toward the next waypoint, where it then arrives precisely.

Collaboration

Thank you to all the course TAs for all of the time and support during this entire semester! I worked on the entire lab with Jack Long and Trevor Dales. ChatGPT was used for checking specifics of implementing math functions in Python.